The Ancient Greek Gods sadistically acknowledged Prometheus for giving fire to the humans. Democratization of fire was met with fierce opposition from those who controlled it: the other Gods. These Uber-creatures chained the individual Titan, a god of lesser stature, onto a rock so that a symbol of power and might could infinitely eat his liver: Zeus’ emblematic eagle.

The eagle was attracted to the magnetism of Prometheus’ liver. The symbols are imposing and heavy. In contrast, the potential interpretative coherence elegantly follows Brownian non-motifs: randomness or arbitrariness. The audience for this theatrical display of abuse comprises both humans and those other godlike creatures. Observing the plight of Prometheus, both sentient sets might now be convinced to mute any ethical concern or dissent. One would not want to suffer what Prometheus is suffering.

A fun fact is that the story, its nascence(s), and its iterations are “controlled,” again in a Brownian manner, by collectives of humans alone. No Gods were harmed in the making of this story. A story in the making it still is nevertheless.

An abrupt intermezzo as a short interconnecting move: the humans who wrote this Greek story might have been as disturbing as the persons concocting any technology that is intended to render humans mute under the candy-flag of democratizing access to technological magic, sparkles, and meaning-making. At least both authors are dealing with their own whimsicality or with the fancifulness they think they observe and hope to control with their form of storytelling: text as technology, and technology creating text. Where lie the nuances which could distinguish or harmonize the providers of technology with the authors of Prometheus?

The low-hanging fruit is, as so often, the plucking of simple polarizations such as past versus present, or fictional versus factual. And yet, nuancing these creates a proverbial gradience or spectrum: there is no “versus,” there is verse. The nuance is the poetry and madness we measure and journey together. The story is the blooming of relations with the other in the past, the now and the future.

Prometheus is crafted and hyped as the promoter of humanity. So too are they who create and they who bring technologies into the world. Their hype is as ambrosia. Yet here the analogy starts to show cracks. Prometheus was not heralded by the story-encapsulated Maker (i.e. Zeus). Only questionably was Prometheus heralded by the actual maker: the human authors of the story.

The innovations in the story were possibly intended not to be democratized, for instance: fire, and more so, the veiling of human authorship, and the diluting of agency over one’s past acts (e.g. Prometheus transferring fire). These innovative potentials (desirable or not) were fervently hidden and strategically used when hierarchical confirmations were deemed necessary. What is today hung from the pillory and what is hidden from sight? What is used as distraction by inducements of fear (of pain), bliss or a more potent mix thereof?

Zeus was hidden from sight. His User Interface (UI) flew in when data needed to be collected. The pool of data was nurtured as was the user experience (UX): Prometheus’ liver grew back every night. In some technologies the character of the Wizard of Oz (who is like Zeus hiding behind the acts of his eagle) is used to discuss how users are tricked into thinking that the technology is far more capable than it actually is. In some cases there are actual humans at play, as if ghosts in the machine, flying in from a ubiquitous yet hidden place.

For the latter one can find examples, as well as for: people filtering content as if they were a bot catering to the end-user (making the user oblivious of the suffering such a human filterer experiences); people not consenting to their output being appropriated into dehumanized databases; a linguistic construct and Q&A sliding a human into confusing consciousness, sentience or artistry as existent at genius levels in the majority of humans or in the human-made technology as much as in the human user. This could be perceived as a slow metamorphosis toward the making of anthropomorphic dehumanization. Indeed, one can dismiss this flippantly by asking: “What makes a human, human? Surely not only this nor that, …nor that, nor…”

Democratization is not that of technology (alone). If one follows the thinking of technology as democratizing, e.g. (as some are claiming) democratizing “art” via easily accessible technological *output,* one can by extension also argue that delusion is more easily democratized: the delusion of being serviced (while being used as a data source and as if a product-offloading platform); of being cared for (while turned into a statistic); of being told to be amazing (rather than being touched by wonder, open inquiry, and duty of care); of being told to be uniquely better (rather than increasingly being part of relational life with others, as opposed to positioned above others); of being in control of one’s input and output (yet being controlled); of having access; and so on. In this flippant manner of promoting a technology, democratization is promoted via technologies as analogous to stale bread and child-friendly games.

The idea of access is central: access to service, amazement, uniqueness, geniuses, and cultural heritages (especially those one does not consider one’s own). A pampered escapism.

The latter is especially intriguing when observing the Diffusion Models anyone can access, which are based on billions of creations by humans that came before us or that even now still roam among us (e.g. https://beta.dreamstudio.ai/home or https://www.midjourney.com/home/).

This offers a type of access to any set of words transformed into any visual. This offers a type of access without those being accessed having any knowledge of the penetration: the artist’s work stored in the databases. Come to think of it, it is not only the artist’s work but rather any human utterance and output stored in any database. With these technologies, as if the liver of Prometheus, the human echoes are not accessed with the consent of their creators. “Democracy,” as access for all, by the beak of Zeus’ eagle. Is that democracy or is that more like unsolicited “access” to a debilitating drug slipped into one’s drink? At least Prometheus felt it when his liver was pecked at.

In closing:

The awesome and tricking power of story is that one and the same structural story can drape various types of functional intent, leaving meaning opaque and deniable. Yes, so too is this story here. So too is Prometheus’ story as well as the stories of technologies, such as the story of Diffusion Models and the word-to-visual technologies derived from them.

Transformation of history into a diffusible amalgamation via stable diffusion technologies is taking human artifacts as Promethean livers to be pecked, regrown and pecked again. This is irrespective of the proverbial or actually experienced “pleasurable” pain it keeps on giving. These technologies are promoted as democratizing. Stating that my technology is democratizing does not mean that it was actually intended to be, that it turns out to be, or that it is applied to be democratizing. Moreover, if this is the depth of democracy, to blindly take what came before, one might want to reconsider this Brownian interpretation of the story of democracy.

The magnetism of life hinted at in human expressions can be borrowed, adapted, and adopted. We learn from the others if we know what it is they have left us to build upon. We can innovate if we understand or are enabled to understand over time what it is that is being transformed. And yet, the nuance, reference and elegance with which it could be considered to be done allows for consciousness, discernment and awareness to be communicated, related and nurtured.

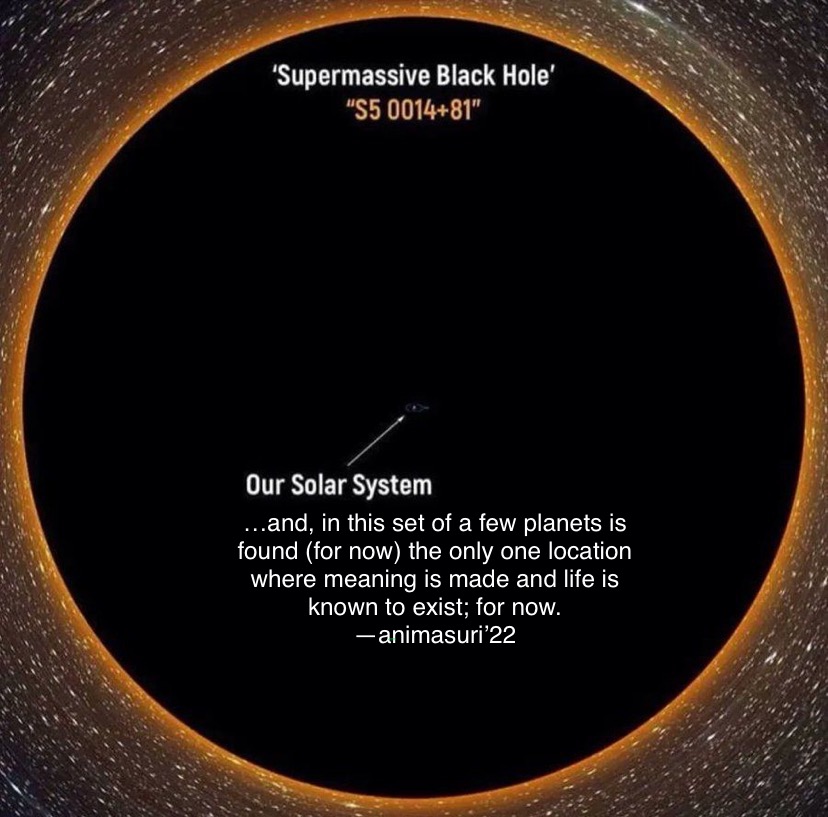

At present the vastness and opaqueness of the databases, within which our data are gluttonously stored, do not yet allow this finesse. While the stories they reinterpret and aggregate could be educational, stimulating and fun, we might want to consider what happens when value-adding, meaning-making randomness moves beyond expert designers and into the hands of the masses. Vast technology-driven access is not synonymous with democratization. Understanding and duty of care are intricate ingredients as well, if any demons in democracy are to be kept at bay.