artist & source

“AI systems that are incredibly good at achieving something other than what we really want … AI, economics, statistics, operations research, control theory all assume utility to be exogenously specified” — Russell, Stuart 2017

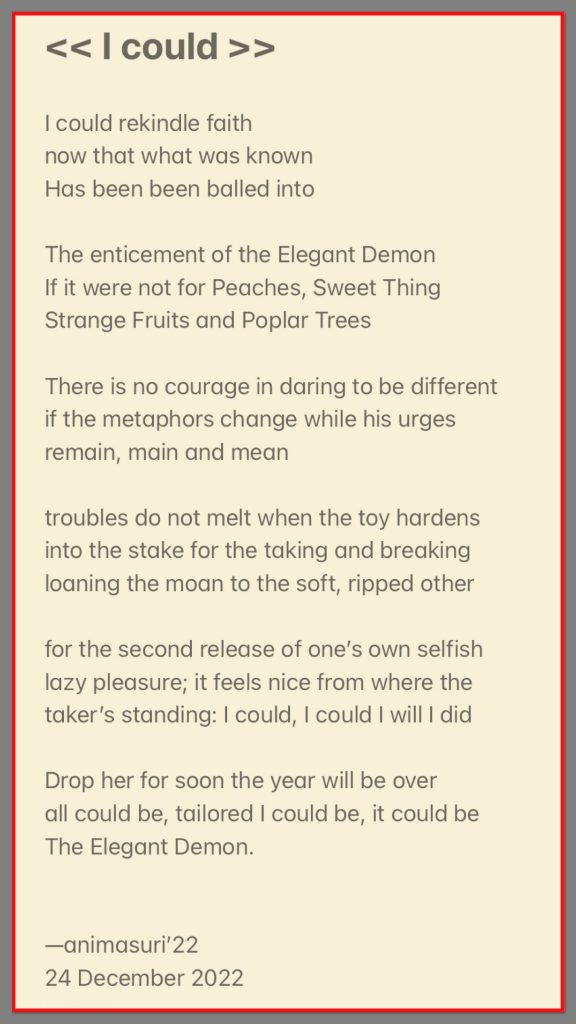

I could rekindle faith

now that what was known

Has been balled into

The enticement of the Elegant Demon

If it were not for Peaches, Sweet Thing

Strange Fruits and Poplar Trees

There is no courage in daring to be different

if the metaphors change while his urges remain, main and mean

troubles do not melt when the toy hardens

into the stake for the taking and breaking

loaning the moan to the soft, ripped other

for the second release of one’s own selfish lazy pleasure; it feels nice from where the taker’s standing: I could, I could I will I did

Drop her for soon the year will be over

all could be, tailored I could be, it could be

The Elegant Demon.

—animasuri’22

24 December 2022

Beijing

Engineering —in a naive, idealized sense— is different from science in that it creates (in)tangible artifacts, as imposed & new realities, while answering a need

It does so by claiming a solution to a (perceived) problem that was expressed by some (hopefully socially-supportive) stakeholders. Ideally (& naively), the stakeholders equal all (life), if not a large section, of humanity

Whose need does ChatGPT answer when it helps to create malware?

Yes, historically the stakeholders of engineering projects were less concerned with social welfare or well-being. At times (too often), an engineered deliverable created (more) problems, besides offering the intended, actual or claimed solution.

What does ChatGPT solve?

Does it create a “solution” for a problem that is not an urgency, not important and not requested? Does its “solution” outweigh its (risky / dangerous) issues sufficiently for it to be let loose into the wild?

Idealized scientific methodology –that is, through a post-positivist lens– claims that scientific experiments can be falsified (by third parties). Is this enabled to any extent in the realm of Machine Learning and LLMs, without some of their creators blaming shortcomings on those who engage in falsification (i.e., trying to proverbially “break” the system)? Should such testing not have been undertaken (in dialog with critical third parties) prior to releasing the artifact into the world?

Idealized (positivist) scientific methodology claims to unveil Reality (Yes, that capitalized R-reality that has been and continues to be vehemently debated and that continues to evade capture). The debates aside, do ChatGPT, or LLMs in general, create more gateways to falsity or tools towards falsehood, rather than toward this idealized scientific aim? Is this science, engineering or rather a renaissance of alchemy?

Falsity is not to be confused with (post-positivist) falsification, nor with offering interpretations, the latter of which Diffusion Models (i.e., text2pic) might be argued to offer (note: this too is and must remain debatable and debated). However, visualization AI technology did open up yet other serious concerns, such as in the realms of attribution, (data) alienation and property. Does ChatGPT offer applicable synthesis, enriching interpretation, or rather, negative fabrication?

Scientific experiment is preferably conducted in controlled environments (e.g., a lab) before letting its engineered deliverables out into the world. Realtors managing ChatGPT or recent LLMs do not seem to function within the walls of this constructed and contained realm. How come?

Business, state incentives, rat races, and financial investments motivate and do influence science and surely engineering. Though is the “democratization” of output from the field of AI then with “demos” in mind, or rather yet again with ulterior demons in mind?

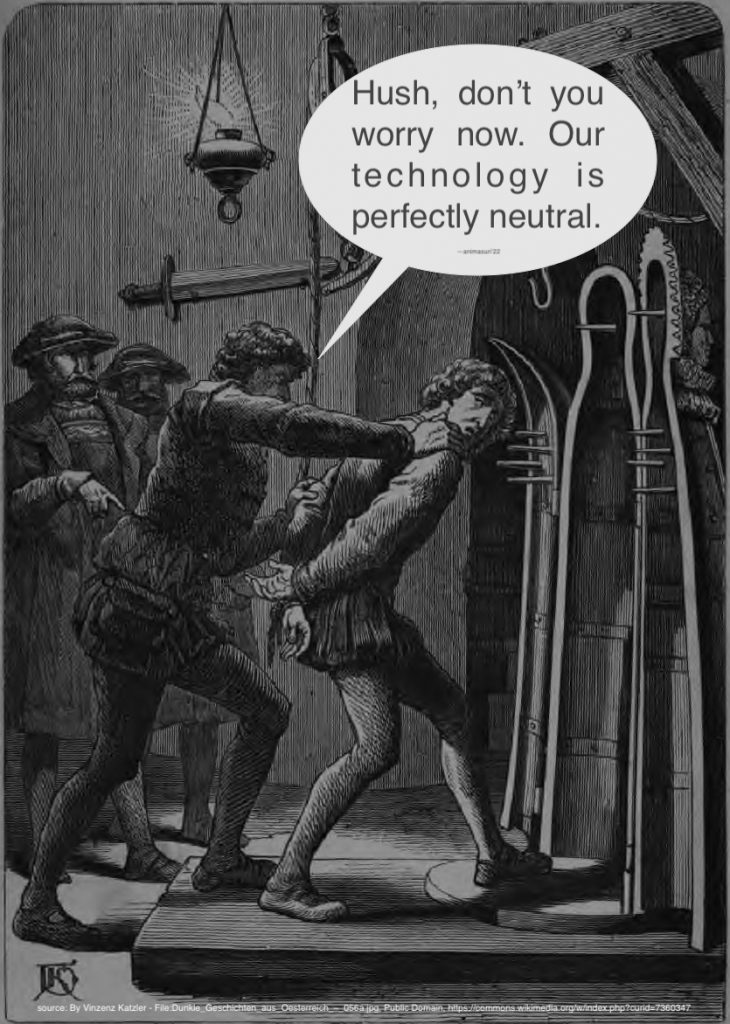

Is it then too farfetched to wonder whether the (ideological) attitudes surrounding, and the (market-driven) release of, such constructs are as if a ware with hints, undertones, or overtones, of maliciousness? If this is not too outlandish an analogy, it might be a good idea not to look at the example of one technology in isolation.