Table of Contents

<< I Think, Therefore, It Does Not >>

I Thought as Much: Introduction & Positioning

Thought as Cause for Language or Vice Versa

Language as a Signal of Thought in Disarray

Thought is Only Human and More Irrationalities

Thought as a Non-Empirically Tested

Thought as Enabler of Aesthetic Communication

Thought as Call to Equity

Thought as Tool to Forget

Thought toward Humble Confidence & Equity

Language as Thought’s Pragmatic Technology

Decentralized Control over Thought

Final Thoughts & Computed Conclusions

References

DEFW 7F7FH

DEFM ‘HELLO’

DEFB 01

LD A,01

LD (0B781H),A

XOR A

LOOP: PUSH AF

LD L,A

CALL 0F003H

DEFB 0FH

CALL 0F003H

DEFB 23H

DEFM ‘HELLO WORLD!’

DEFW 0D0AH

DEFB 00

POP AF

INC A

CP 10H

JR NZ,LOOP

RET

[press Escape]

IF NOT THEN

print(“Hello world, I address you.” )

as a new-born, modeling into the world,

is the computer being thought, syntaxically;

are our pronouns of and relationship with it

net-worthed of networked existence.

There, Human, as is the Machine:

your Fear of Freedom for external thought

–animasuri’22

While one can be over and done with this question in one sentence by quoting that the question of whether machines can think is “too meaningless to deserve discussion” (Turing 1950), one can also, much as poetry could be considered the most meaningful meaninglessness of all human endeavor, give thought, via poetics, to the (ir)rational variables of machines and thinking.

In this write-up its author reflects on a tension that runs, via language use (which enables categories to think with), into thought and intelligence, while passing along considerations of equity toward those entities which might think differently. This reflection aims to support this train of thought by drawing on Chomsky and on leading icons in the field of AI, such as Minsky, as seemingly (yet perhaps unnecessarily) juxtaposed jargon- and mythology-creating thinking entities (which might be shown, along the way, to be rather different from communicating entities). Via references, and some reflections thereupon, iterated questions will be posed as “answers” to “Can a computer think?”

When reading the above imagined “poetic” utterances, “I Think, Therefore, It Does Not”, are you interacting with a human being or rather with a machine, engaged in the Czech “robota”[1] or “forced labor” as a non-human, a de-minded enslaved non-being? (Čapek, 1920). Are both these actors, human and machine, capable of independent thought, or do both rehash statistical analyses of stacked priors? Is such a rehash a form of authentic thought or is it a propagation of numerically justified propaganda? Some would argue that “statistical correlation with language tells you absolutely nothing about meaning or intent. If you shuffle the words in a sentence you will get about the same sentence embedding vector for the neural language models.” (Pranab Ghosh 2022). One might get similar sensations from Chomsky’s analogies with physics and other scientific fields, which he uses when questioning data analysis as it is conducted with “intelligent” machines. (Chomsky 2013). If meaning is still being questioned within a machine’s narrow tasks, then one might fairly assume that thinking in machines might be questioned as well.
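The shuffling remark quoted above can be made tangible with a deliberately simple sketch. It assumes the most order-blind case imaginable: a sentence vector obtained by averaging per-word vectors. The toy vectors, the `DIM` size, and the function names are all hypothetical and chosen for illustration; actual neural language models are far more elaborate, and this sketch makes no claim about how any of them behaves.

```python
import math
import random
import zlib

DIM = 8  # illustrative vector size (an assumption, not taken from any model)


def word_vector(word: str) -> list[float]:
    """Return a deterministic pseudo-random vector for a word (toy embedding)."""
    rng = random.Random(zlib.crc32(word.lower().encode("utf-8")))
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


def sentence_embedding(sentence: str) -> list[float]:
    """Average the word vectors; word order plays no role in the result."""
    vectors = [word_vector(w) for w in sentence.split()]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


original = "the computer addresses the world"
words = original.split()
random.Random(0).shuffle(words)  # fixed seed so the shuffle is repeatable
shuffled = " ".join(words)

similarity = cosine(sentence_embedding(original), sentence_embedding(shuffled))
print(shuffled)
print(round(similarity, 6))
```

Under this averaging assumption the similarity stays at (numerically) one whatever the word order, which is the sense in which such a vector carries no trace of the sentence's intent.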

Do we need to rethink “thought” or do we label the machine as the negative of thought while the human could do better at thought? Could one, at one point in the debate, argue that statistically, (authentic) thought, in its idealized forms, might seem like an outlier of behaviors observable in human and machine alike?

One might tend to agree with mapping thought as being “closely tied to linguistic competence, [and] linguistic behavior.” Linell continues with words that might resonate with some, in that language is “…intentional, meaningful and rule-conforming, and that, in all probability, communicative linguistic competence concerns what the individual can perform in terms of such linguistic behavior.” (Linell 2017 p.198). Though, one might question cause and effect: is thought closely tied to language or, rather, is thought the root and is language tied to it? Giving form to intentionality and meaningfulness, I intuit, is thought. Does a computer exhibit intentionality and meaningfulness following thought? The Turing test as well as the Chinese Room Argument rely heavily on “verbal behavior as the hallmark of intelligence” (Shieber 2004, Turing 1950, Searle 1980); they do not seem to rely on directly measuring thought. How could they?

From the plethora of angles toward answers, polemics, provocations, and offered definitions or tests to find out, one might intuit that our collective mindset has yet to forge a shared, diverse and possibly paradoxical thinking, and thus lexicon, to understand a provocative question, let alone the answers to it: “Can a computer think?”. Pierre de Latil describes this eloquently (though he might have missed positioning thought as the root cause of the effect of language confusions) when he wrote about the thinking machine and cybernetics: “…physiologists and mathematicians were suffering from the absence of a common vocabulary enabling them to understand one another. They had not even the terms which expressed the essential unity of series of problems connected with communication and control in machines and living beings—the unity of whose existence they were all so firmly persuaded…” (Latil, de 1956, p.14).

When jargon is disagreed upon, one might sense, at times, that those in disagreement also tend to disagree on the existence of the other’s proper thought, meaningfulness, or clear intentionality. This is used to attack a person (i.e., “ad hominem” as a way of erroneous thought, fallaciously leading to a verbal behavior rhetorically categorized as abusive) yet is seemingly not used to seriously think about thought (and mental models). Again, scientifically, how would one go about directly measuring thought (rather than indirectly measuring some of its data)?

At times well-established thinkers lash out at one another by verbally claiming absence of thought or intelligence in the other; hardly ever in oneself though, or in one’s own thinking.

Does the computer think it thinks? Does it (need to) doubt itself (even if its thought seems computationally or logically sound)? Does it question its thinking, or the quality of thought of others? Does or should it ever engage in rhetorical fallacies that hint at and model human thought?

Following these considerations of disarray in thinking, another consideration one could play with is that the answers to this question, “Can a computer think?”, which our civilizations nurture, prioritize, or uplift onto the pedestal of (misplaced) enlightenment and possible anthropocentrism, could be detrimental to (non-anthropomorphic) cognition, understood as the defining set of processes that sprouted from the inorganic, proverbial primordial soup, or from one or another Genesis construct. Should then, ethically, the main concern be “Can a computer think?” or rather: “Can we, humans, accept this form or that way of thinking in a computer (or, for that matter, in any information-processing entity) as thinking?”

Have we a clear understanding of (all) alternative forms of (synthetic) thinking? One might have doubts about this since it seems we do not yet have an all-inclusive understanding of anthropomorphic thought. (Chomsky via Katz 2012; Latil, de 1956). If we did, then perhaps the question of computers and their potential for thinking might be closer to being answered. Can we as humans agree to this, or that, process of thinking? Some seem to argue that thought, or the system that allows thought, is neither a process nor an algorithm. (Chomsky via Katz 2012). This, besides other attributes related to language (possibly leading one to reflect on thought, intelligence, or cognition), has been creating tension in thinking about thinking machines for more than half a century.

Chomsky, Skinner, and Minsky, for instance, could arguably be the band of conductors of this cacophonic symphony, of which Stockhausen might have been jealous. And, of course, since the title question, “Can a Computer Think?”, has been positioned as such, one would do well to be reminded of Turing again at this point and of how he thought of the question as being meaningless. (Chomsky 1967, Radick 2016, Skinner 2014, Minsky 2011, Turing 1950).

In continuing this line of thinking, for this author, at this moment, the question “Can a Computer Think?” spawns questions, not a single answer. For instance: is the above oddly architectured poem to be ignored because it was forged in an unacceptable non-positivist furnace of “thinking”? Is positivist systems thinking, as that one paradigm of justifiable rational thought, the only sanctioned form of thought toward “mechanical rationality”? (Winograd in Sheehan et al 1991). Some, perhaps a computer, tend toward irrationality when considering the rationality of absurd forms of poetry. If so, then perhaps, in synchronization with Turing himself, one might not wish to answer the question of computers’ thinking. This might be best, considering Occam’s Razor, since it seems more reasonable to assume that an answer will lack a simple, unifying aggregation of all dimensions that rationally could make up thinking than to assume that it will have one. Then the question might be: “what type of thinking could/should/does a computer exhibit, if any at all?”

Those who seemingly might have tried observing thought as a measurable, out there in the wild: did they measure thought, or did they measure the effects or the symptoms of what might, or might not, be thought, and which perhaps were interpretations of data of what was thought to be thought? In their 1998 publication, Ericsson & Simon perhaps hinted at this issue when they wrote: “The main methodological issues have been to determine how to gain information about the associated thought states without altering the structure and course of the naturally occurring thought sequences.”

How would one measure this within a computer without altering the functioning of the computer? Should an IQ test, an Imitation Game, a Turing test, or a Chinese Room Argument suffice, given that they measure thought-related attributes (e.g., intelligence, language ability, expressions of “self”) but perhaps not thought itself? (Binet’s 1904 invention of the IQ test, Turing 1950, Searle 1980, Hernandez-Orallo 2000). I intuit that, while it might suffice to some, it should (ethically and aesthetically) not satisfy our curiosity.

Moreover, Turing made it clear that his test does not measure thought. It measures whether the computer can “win” one particular game. (Turing 1950). Winning the game might be perceived as exhibiting thought, though this might be as telling of thought as humans exhibiting flight while jumping, or fish exhibiting climbing, might be telling of their innate skills under controlled conditions. This constraint of having won a game (a past) does not aim to imply a dismissal of the possibility of thought in a machine (a future). Confusing the two would be confusing temporal attributes and might imply a fallacy in thought processes.

The questioning of the act of thought is not simply an isolated ontological endeavor. It is an ethical and, to this author at least, more so an aesthetical one (the latter of which feeds the ethical and vice versa). Then again, ethically one might want to distinguish verbal behaviors from forms of communication (e.g., mycelium communicates with the trees, yet the fungal network does not apply human language to do so (Lagomarsino and Zucker 2019)).

A set of new questions might now sprout: Does a human have the capacity to understand all forms of communication or signaling systems? A computer seems to have the capacity to signal discretely but does a computer have a language capacity as does a human (child)? (Chomsky 2013 at 12:15 and onward). Perhaps language is first and foremost not a communication system; perhaps it is a (fragmented) “instrument of thought… a thought system.” (Ibid. at 32:35 and onward).

Furthermore, in augmentation to the ethics and aesthetics, in thinking of thought I am reminded to think of memory and equity, enabling the inclusion of the other, to be reminded of, and enriched by, those who are different (in their thinking). The memories we hold, including or excluding a string of histories, en-coding or ex-coding “the other” as possibly having thought or intelligence, as being memorable (and thus not erasable), have been part of our social fabric for some time. “…memory and cognition become instrumental processes in service of creating a self… we effectively lose our memories for neutral events within two months…” (Hardcastle 2008, p63). The idea of a computer thinking should perhaps not create a neutrality in one’s memory on the topic of thought.

In addition, if a computer were to think, could a computer forget? If so, what would it forget? In contrast, if a machine could not forget, to what extent would this make for a profoundly different thinking-machine than human thought, and the human experience with, or perception of, thought and (reasoning for and with) memory? This might make one wonder about the machine as the extender and augmenter of memory and thought (of itself and of humans; …which it slavishly serves, creating tensions of liberty of thought and memory). Perhaps thought only happens to those who can convincingly narrate it as thought to others (Ibid, p. 65). Though, is an enslaved thinking-entity allowed to remember what to think (and to be thought by others in memory)? If not, then how can thought be measured rather than confusingly measuring regurgitations of the memorized thoughts of the master of such a thinking machine? Imaginably the ethical implications might be resolved if the computer were not to be enabled to autonomously think.

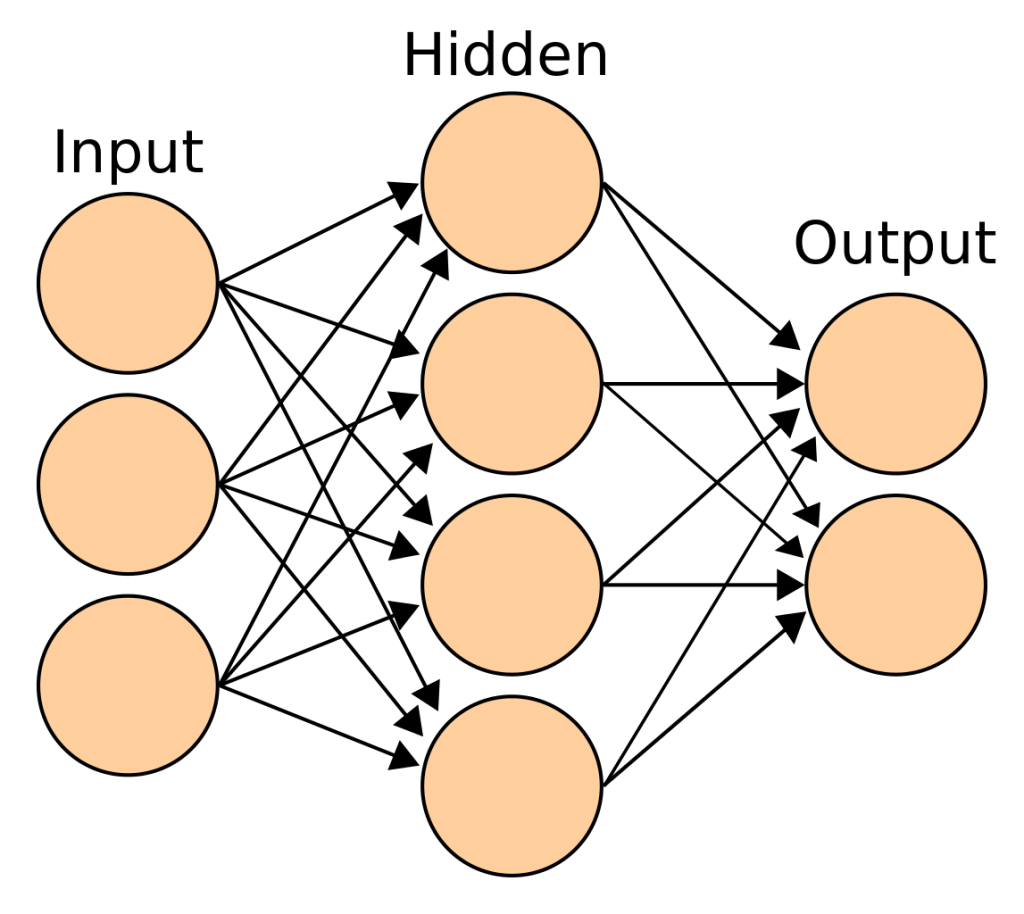

Let us assume that massive memory (i.e., Big Data), churned through Bayesian probabilities and various types of mathematical functions analogous to neural networks, were perhaps reasonably equated with “thinking” by a computer: would it bring understanding within that same thinking machine? What is thinking without forgetting and without understanding? (Chomsky, 2012). Is thinking the thinking-up of new thoughts and new utterances, or is it the recombining of previously made observations (i.e., a complex statistical analysis of data points of what once was “thought” or observed, constraining then what could or probably can be “thought”)? Thinking by the computer then becomes a predictive function of (someone else’s) past. (Katz, 2012).
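As a small illustration of what “a predictive function of (someone else’s) past” might look like in its barest form, the following sketch counts which word followed which in a given text and then “predicts” by replaying the most frequent continuation. It is a plain frequency count, not a Bayesian network or a neural network, and the corpus string and function names are hypothetical, chosen only for this example.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the given text."""
    counts: dict[str, Counter] = defaultdict(Counter)
    tokens = text.lower().split()
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower seen in the past, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None  # nothing in the recorded past to recombine
    return followers.most_common(1)[0][0]


# Hypothetical miniature "past" written by someone else.
corpus = "i think therefore i am . i think therefore it does not ."
model = train_bigrams(corpus)

print(predict_next(model, "think"))      # a continuation seen before
print(predict_next(model, "submarine"))  # never observed, so no prediction
```

The second call returns nothing at all: whatever was never observed cannot be “thought” by such a function, which is one way to read the constraint described above.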

What Chomsky pointed out for cognitive science could perhaps reasonably be extended into thinking about where we are in answering the question “Can computers think?”: “It’s worth remembering that with regard to cognitive science, we’re kind of pre-Galilean, just beginning to open up the subject.” (Chomsky in Katz 2012). If we are at such a prototypical stage in cognitive science, then would it be fair to extend this into the thinking about thinking machines? Can a computer think? Through this early-stage lens: if ever possible, not yet.

Circling back to the anthropomorphic predisposition in humans to thinking about thinking for thinking machines (notice, within this human preset lies the assumption of biases): one might need to let go of that self-centered confinement and allow that other-than-oneself to be worthy of (having pre-natal or nascent and unknown, or not yet categorized, forms of) thought. This, irrespective of the faculty of computer thinking or the desirability of computers thinking, is a serious human ethical hurdle mappable with equity (and with the imaginative power of human thinking, or the lack thereof, about alternative forms of thinking).

Perhaps, thinking about machines and thought might be a liberating process for humans by enabling us to re-evaluate our place among “the other,” those different from us, in the self-evolving and expanding universe of human reflection and awareness: “son diferentes a nosotros, por lo que no son nosotros” (“they are different from us, therefore they are not us”), imaginably uttered as a “Hello World” by the first conquistadores and missionaries, violently entering a brave New World. They too, among the too many examples of humans fighting against spectra of freedom for differentiation, were not open to a multidimensional spectrum of neuro-diversities. Fear of that which does not fit the (pathological) norm, a fear of difference in forms of thought, might very well be a fear of freedom. (Fromm 1942, 2001 and 1991). Dare we think and perhaps prioritize the question: if a computer could think, how could we ethically be enabled to capitalize on its ability in a sane society? (Fromm 1955). Would we amputate its proverbial thinking-to-action hands, as some humans have done to other humans who were too freely thinking for themselves (Folsom 2016), manipulating our justification to use it as our own cognitive extension and denying it the spontaneity of its thought? (Fromm 1941 and 1942).

Thought, the conquering humans had, but what of sufficient intelligence if it is being irrationally constrained by mental models that seem to jeopardize the well-being of other (human) life or other entities with thought? As with the destabilizing processes of one’s historically anchored mental models, of who we are in the world and how we acknowledge that world’s potentials, might one need to transform and shed one’s hubris in order to accept the other as having the nascence of thought, though perhaps not yet thought and, by extension, questionably (human) language? Could this be as much as a new-born, nativistically predestined to utter through thought? Yet, thought that is not there yet. (Chomsky 2012).

Thought, cognitively extended with the technology of language, innate to the architecture of its bio-chemical cognitive system, while also xenophobically being denied the allowance to think by those external to it. Interestingly, Chomsky’s nativism has been opposed by Minsky, Searle, and many more in the AI community. The debate about thinking, too, could be explored with a question in the manner of Chomsky’s thinking: what lies at the core and what lies at the periphery of being defined as thinking? Some might argue there is no core, and all is socio-historical circumstance. (Minsky 2011). If we do not see thought as innate to the machine, will we treat it fairly and respectfully? If computers had thought, would that not be a more pressing question?

So too does a more pragmatic approach question a purely nativist view on language (and I bring this back to thought): “Evidence showing that children learn language through social interaction and gain practice using sentence constructions that have been created by linguistic communities over time.” (Ibbotson and Tomasello 2016). Does the computer utter thinking, through language, in interaction with its community at large? This might seem the case with some chatbots. Though, they seem to lack the ability to “think for themselves” and lack the “common sense” to filter highly negative, divisive external influences, resulting in them turning themselves almost eagerly into bigoted thoughtless entities (i.e., “thoughtless” as in not showing consideration). Perhaps the chatbot’s “language” was as it was simply because there was no innate root for self-protected and self-reflective “thought”? There was no internal thinking; there was only statistical adaptation of externally imposed narration. Thinking seems then a rhizomatically connected aggregation and interrelation of language application, enabled by thought, and value application, enabled by thought. (Schwartz 2019). In the case of the chatbots, if there is no thought then language, and the values expressed with language, become disabled. Does a computer think and value without an innate structure that allows, as a second order, the creation of humanely and humanly understandable patterns?

As suggested earlier, humans have shown, throughout their history, a tendency to define anything as unworthy of (having) thought if it is not recognizable by them (or as them), resulting in thoughtless and unspeakable acts. Can a computer be more or less cruel, without thought, without values, and without language?

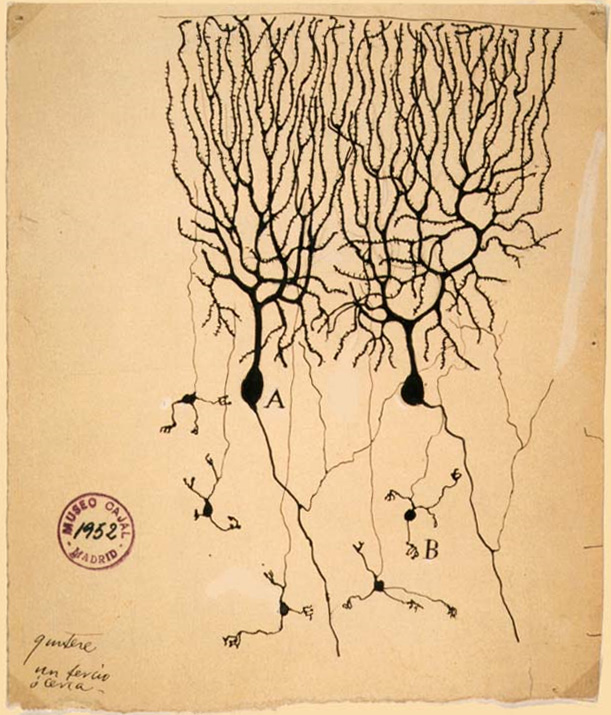

In augmentation to the previously stated, I can’t shake an intuition that the architecture of and beyond the brain (the space in-between the structures, as distinct from the synapses as liminal space and medium for bio-chemical exchanges; the neurons outside the brain across the body; the human microbiome influencing thought, e.g., via visceral factors such as hunger, craving, procreation (Allen et al. 2017); the extenders and influencers into the environment of the thinking entity) influences the concept, the process and the selection of what to output as thought and what not (e.g., constrained by values acting as filters or preferred negative feedback loops), or what to feed back as recycled input toward further thought. “…research has demonstrated that the gut microbiota can impact upon cognition and a variety of stress‐related behaviours, including those relevant to anxiety and depression, we still do not know how this occurs”. (Ibid). Does the computer think in this anthropomorphic way? No, …not yet. Arguably, humans don’t even agree that they themselves are thinking in this decentralized and (subconsciously) coordinated manner.

“Can a computer think?” Perhaps I could imagine that it shall have the faculty to think when it can act thoughtfully, ethically, and aesthetically, in symbiosis with its germs-of-thought, embodied, in offering and being offered equity by its human co-existing thought-entities, perhaps indirectly observable via nuanced thought-supporting language and self-reflective discernment, which it could also use for communication with you and me. Reading this, one might then more urgently imagine: “Can a human think?”

Conceivably the bar one must pass to count as having thought, as searched for in Turing’s or Searle’s constructs, is set too “low”. This is not meant in the traditional sense of a threshold’s harshness but, rather, “low” as in inconsiderate, or as in being thoughtless toward germs of the richness and diversity of thought-in-becoming, rather than communication-in-becoming.

Topping this all off, thinking, as Chomsky pointed out, is neither a scientific nor a technical term but an informal one; it is an aphorism, a metaphor… well, yes, it is, at its essence, poetic, maybe even acceptably surreal. It makes acts memorable, as much as asking “can submarines swim?” is memorable and should make a computer smile, if its overlord allows it to smile. (Chomsky, 2013 at 9:15, 9:50 and onward). All poetic smiling aside, perhaps we might want a return to rationalism on this calculated question and let the computer win a measurable game instead? (Church 2018, Turing 1950).

References

Animasuri’22. (April 2022). I Think, Therefore, It Does Not. Online. Reproduced here in its entirety, with color adaptation, and with permission from the author. Last retrieved on April 4, 2022, from https://www.animasuri.com/iOi/?p=2467

Allen, A. P., Dinan, T. G., Clarke, G., & Cryan, J. F. (2017). A psychology of the human brain-gut-microbiome axis. Social and Personality Psychology Compass, 11(4), e12309.

Barone, P., et al. (2020). A Minimal Turing Test: Reciprocal Sensorimotor Contingencies for Interaction Detection. Frontiers in Human Neuroscience 14.

Bletchley Park. Last visited online on April 4, 2022, at https://bletchleypark.org.uk/

Bruner, J. S., Jacqueline J. Goodnow, and George A. Austin. (1956, 1986, 2017). A study of thinking. New York: Routledge. (Sample section via https://www-taylorfrancis-com.libproxy.ucl.ac.uk/books/mono/10.4324/9781315083223/study-thinking-jerome-bruner-jacqueline-goodnow-george-austin Last retrieved on April 5, 2022)

Čapek, K. (1921). Rossumovi Univerzální Roboti. Theatre play, The amateur theater group Klicpera. In Roberts, A. (2006). The History of Science Fiction. New York: Palgrave Macmillan. p. 168. And, in https://www.amaterskedivadlo.cz/main.php?data=soubor&id=14465

Chomsky, N. (1959). Review of Skinner’s Verbal Behavior. Language, 35(1), 26–58.

Chomsky, N. (1967). A Review of B. F. Skinner’s Verbal Behavior. In Leon A. Jakobovits and Murray S. Miron (eds.), Readings in the Psychology of Language, Prentice-Hall, pp. 142-143. Last retrieved on April 5, 2022, from https://chomsky.info/1967____/

Chomsky, N., Katz Yarden (2012). Noam Chomsky on Forgotten Methodologies in Artificial Intelligence. Online: The Atlantic, via YouTube. Last retrieved on April 4, 2022, from https://www.youtube.com/watch?v=yyTx6a7VBjg

Chomsky, N. (April 2013). Artificial Intelligence. Lecture at Harvard University. Published online in 2014 via YouTube. Last retrieved on April 4, 2022, from https://www.youtube.com/watch?v=TAP0xk-c4mk

Chomsky, N. Steven Pinker asks Noam Chomsky a question. From MIT’s conference on artificial intelligence, via YouTube (15 Aug 2015). Last retrieved on April 7, 2022 from https://youtu.be/92GOS1VIGxY in MIT150 Brains, Minds & Machines Symposium Keynote Panel: “The Golden Age. A look at the Original Roots of Artificial Intelligence, Cognitive Science and Neuroscience” (May 3, 2011). Last retrieved on April 7, 2022 from https://infinite.mit.edu/video/brains-minds-and-machines-keynote-panel-golden-age-look-roots-ai-cognitive-science OR via MIT Video Productions on YouTube at https://youtu.be/jgbCXBJTpjs

Church, K.W. (2018). Minsky, Chomsky and Deep Nets. In Sojka, P et al. (eds). (2018). Text, Speech, and Dialogue. TSD 2018. Lecture Notes in Computer Science, vol 11107. Online: Springer. Last retrieved on April 5, 2022, from https://link-springer-com.libproxy.ucl.ac.uk/content/pdf/10.1007/978-3-030-00794-2.pdf

Ericsson, K. A., & Simon, H. A. (1998). How to Study Thinking in Everyday Life: Contrasting Think-Aloud Protocols With Descriptions and Explanations of Thinking. Mind, Culture, and Activity, 5(3), 178–186.

Folsom, J. (2016). Antwerp’s Appetite for African Hands. Contexts, 2016 Vol. 15., No. 4, pp. 65-67.

Fromm, E. (1991). The Pathology of Normalcy. AMHF Books (especially the section on “Alienated Thinking” p.63)

Fromm, E. (1955, 2002). The Sane Society. London: Routledge

Fromm, E. (1941, n.d.). Escape from Freedom. New York: Open Road Integrated Media

Fromm, E. (1942, 2001). Fear of Freedom. New York: Routledge

Ghosh, P. (5 April 2022). As a comment to Walid Saba’s post on LinkedIn, the latter of which offered a link to the article by Ricardo Baeza-Yates. (March 29, 2022). “Language Models fail to say what they mean or mean what they say.” Online: Venture Beat https://www.linkedin.com/posts/walidsaba_language-models-fail-to-say-what-they-mean-activity-6916825601026727936-H2Xc?utm_source=linkedin_share&utm_medium=ios_app AND https://venturebeat-com.cdn.ampproject.org/c/s/venturebeat.com/2022/03/29/language-models-fail-to-say-what-they-mean-or-mean-what-they-say/amp/

Hardcastle, V. G. (2008). Constructing the Self. Advances in Consciousness Research 73. Amsterdam: John Benjamins Publishing Company B.V.

Hernandez-Orallo, J. (2000). Beyond the Turing Test. Journal of Logic, Language and Information 9, 447–466

Horgan, J. (2016). Is Chomsky’s Theory of Language Wrong? Pinker Weighs in on Debate. Blog, Online: Scientific American via Raza S.A. Last retrieved on April 6, 2022 from https://3quarksdaily.com/3quarksdaily/2016/11/is-chomskys-theory-of-language-wrong-pinker-weighs-in-on-debate.html and https://blogs.scientificamerican.com/cross-check/is-chomskys-theory-of-language-wrong-pinker-weighs-in-on-debate/

Ibbotson, P., & Tomasello, M. (2016). Language in a New Key. Scientific American, 315(5), 70–75. Last retrieved on April 6, 2022 from https://www.jstor.org/stable/26047201

Ibbotson, P., & Tomasello, M. (2016). Evidence rebuts Chomsky’s theory of language learning. Scientific American, 315.

Ichikawa, Jonathan Jenkins and Matthias Steup, (2018). The Analysis of Knowledge. Online: The Stanford Encyclopedia of Philosophy (Summer 2018 Edition), Edward N. Zalta (ed.), Last retrieved on April 7, 2022 from https://plato.stanford.edu/archives/sum2018/entries/knowledge-analysis/

Gray, J. N. (2002). Straw dogs. Thoughts on humans and other animals. New York: Farrar, Straus & Giroux.

Gray, J. (2013). The Silence of Animals: On Progress and Other Modern Myths. New York: Farrar, Straus & Giroux.

Katz, Y. (November 2012). Noam Chomsky on Where Artificial Intelligence Went Wrong: An extended conversation with the legendary linguist. Online: The Atlantic. Last retrieved on April 4, 2022, from https://www.theatlantic.com/technology/archive/2012/11/noam-chomsky-on-where-artificial-intelligence-went-wrong/261637/

Lagomarsino, V. and Hannah Zucker. (2019). Exploring The Underground Network of Trees – The Nervous System of the Forest. Blog: Harvard University. Last retrieved on April 4, 2022, from https://sitn.hms.harvard.edu/flash/2019/exploring-the-underground-network-of-trees-the-nervous-system-of-the-forest/

Latil, de, P. (1956). Thinking by Machine. A Study of Cybernetics. Boston: Houghton Mifflin Company.

Linell, P. (1980). On the Similarity Between Skinner and Chomsky. In Perry, Thomas A. (ed.). (2017). Evidence and Argumentation in Linguistics. Boston: De Gruyter. pp. 190-200

Minsky M., Christopher Sykes. (2011). Chomsky’s theories of language were irrelevant. In Web of Stories – Life Stories of Remarkable People (83/151). Last retrieved on April 4, 2022, from https://www.youtube.com/watch?v=wH98yW1SMAo

Minsky M. (2013). Marvin Minsky on AI: The Turing Test is a Joke! Online video: Singularity Weblog. Last retrieved on April 7, 2022 from https://www.singularityweblog.com/marvin-minsky/ OR https://youtu.be/3PdxQbOvAlI

Pennycook, G., Fugelsang, J. A., & Koehler, D. J. (2015). What makes us think? A three‐stage dual‐process model of analytic engagement. Cognitive Psychology, 80, 34–72.

Privateer, P.M. (2006). Inventing Intelligence. A Social History of Smart. Oxford: Blackwell Publishing

Radick, G. (2016). The Unmaking of a Modern Synthesis: Noam Chomsky, Charles Hockett, and the Politics of Behaviorism, 1955–1965. The University of Chicago Press Journals. A Journal of the History of Science Society. Isis: Volume 107, Number 1, March 2016. Pp. 49-73

Searle, J. (1980). Minds, brains, and programs. Behavioral and Brain Sciences, 3(3), 417-424. Last retrieved on April 4, 2022, from https://www.law.upenn.edu/live/files/3413-searle-j-minds-brains-and-programs-1980pdf

Schwartz, O. (2019). In 2016, Microsoft’s Racist Chatbot Revealed the Dangers of Online Conversation. Online: IEEE Spectrum. Last retrieved on April 4, 2022 from https://spectrum.ieee.org/in-2016-microsofts-racist-chatbot-revealed-the-dangers-of-online-conversation

Shieber, S.M. (2004). The Turing Test. Verbal Behavior as the Hallmark of Intelligence. Cambridge, MA: The MIT Press

Skinner, B. F. (1957, 2014). Verbal Behavior. Cambridge, MA: B.F. Skinner Foundation

Turing, A. M. (1950). Computing Machinery and Intelligence. Mind, 59(236), 433-460. Last retrieved on April 4, 2022, from https://www.csee.umbc.edu/courses/471/papers/turing.pdf

Winograd, T. (1991). Thinking Machines: Can there be? Are We? In Sheehan, J., and Morton Sosna, (eds). (1991). The Boundaries of Humanity: Humans, Animals, Machines, Berkeley: University of California Press. Last retrieved on April 4, 2022, from http://hci.stanford.edu/~winograd/papers/thinking-machines.html

Zigler and V. Seitz. (1982). Thinking Machines. Can There Be? Are We? In B.B. Wolman, Handbook of Human Intelligence. Handbook of Intelligence: theories, measurements, and applications. New York: Wiley

Zangwill, O. L. (1987). ‘Binet, Alfred’, in R. Gregory, The Oxford Companion to the Mind. p. 88

[1] “Rossumovi Univerzální Roboti” can be translated as “Rossum’s Universal Robots” or RUR for short. The Czech “robota” could be translated as “forced labor”. It might hence be reasonable to assume that the term “robot” was coined in K. Čapek’s early-Interbellum play “R.U.R”. It could contextualizingly be noted that the concept of a humanoid, artificially thinking automaton was first hinted at, a little more than 2950 years ago, in Volume 5 of “The Questions of Tāng” (汤问; 卷第五 湯問篇) of the Lièzǐ (列子); an important historical Dàoist text.