As summarized by Patton (1997), Sirotnik (1990) pointed to the importance of “equality, fairness and concern for the common welfare.” This applies both to processes of evaluation and to the implementation of interventions (in education). It is achieved through the participation of those who will be most affected by that which is being evaluated. These authors, among many others, offer various insights into practical methods and forms of evaluation, some more participatory in nature than others.

With this in mind, let us now divert our gaze to the stage of “AI”-labeled research, application, implementation and hype. Let us then look deeper into its evaluation (via expressions of ethical concern or critique).

“AI” experts, evaluators and social media voices warn of risks and call for fair “AI” application (in society at large, and thus also in education).

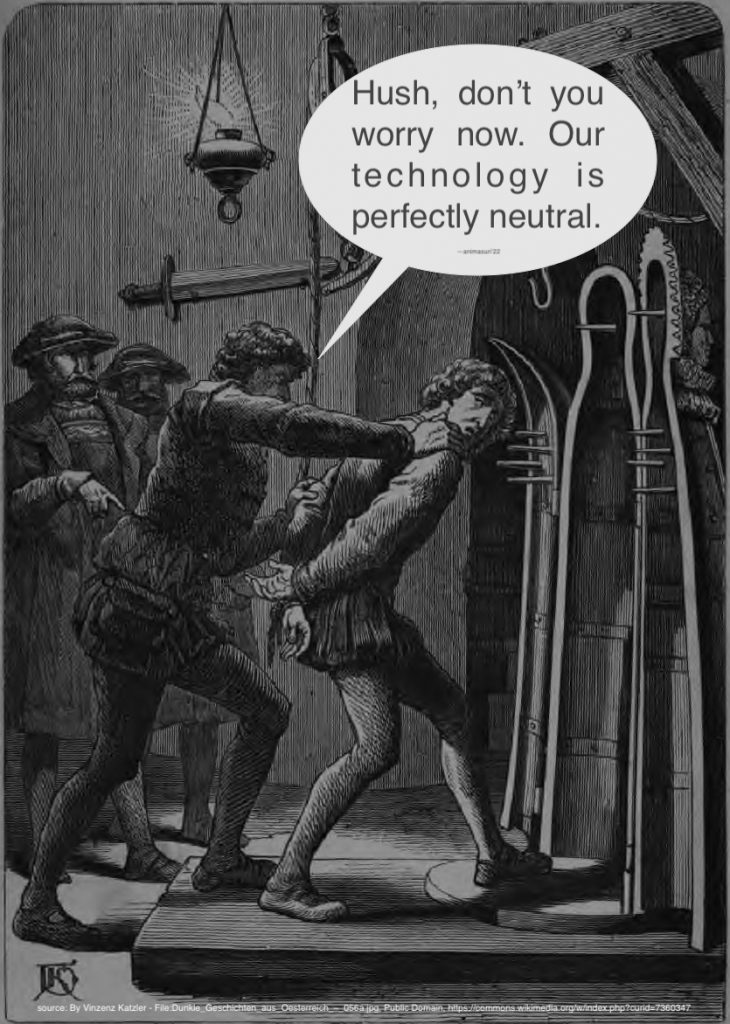

These calls occur while some voice ethical concerns related to fairness and others dismiss these concerns, often while also discounting the very people who voice them. To an outsider looking in on the public polemics, it might seem “cut-throat.” And yet, if we are truly concerned about ethical implications and about answering the needs of peoples, this violent image, and the (de)relational acts it gathers in its imagery, has no place. Debate, evaluate, dialog: yes. Debase, depreciate, monologue: no. Yes, it does not go unnoticed that a post such as this one initially runs as a monologue, only quietly inviting dialog.

So long as experts, and perhaps any participant alike, are their own pawns, they are also violently placed according to their loyalties to very narrow tech formats. Submit to the theology of the day and thou shalt be received. Voices are tested, probed, inducted, stripped or denied. Evaluation as such is violent and questionably serves that “common welfare” (Ibid.). Individuals are silenced or over-amplified, while others are simply not considered worthy of being heard, let alone understood. These processes, too, have been assigned to “AI”-relatable technologies (i.e., algorithmic designs that determine how messages are boosted or muted). So goes the human endeavor. So goes the larger human need, giving way to the forces of market gain and to the loudest noises on the information highways, which we cynically label as “social.”

These polemics occur while, in almost the same breath, they are kept within the bubble of the same expert voices: the engineer, the scientist, the occasional policymaker, the business leader (at times narrated as if in their own echo chambers). The experts are “obviously” “multidisciplinary.” That is, many seem, tautologically, to come from within the fields of Engineering, Computer Science, Cognitive Science, Cybernetics, Data Science, Mathematics, Futurology, (some) Linguistics, Philosophy of Technology, or variations, sub-fields and combinations thereof. And yet, even there, rationality goes off the rails, and theologies and witches become but distastefully mislabeled dynamics, pitchforking human relations.

Some of the actors in these theatrical stagings have no industrial backing, while other characters are perceived as comfortably leaning against the strongholds of investors, financial and geopolitical forces, or mega-sized corporations. This is known. Yet it is taboo (and perhaps defeatist) to bundle it all together as a (social) “reality.” Ethical considerations of these conditions are frequently hushed or, more easily, burned at the stake. Mind you, across human history, the witch never was a witch, yet was evaluated so as to be better known as a witch; or else…

Similar to how some perceive the financially rich (as taking stages to claim insight into any aspect of human life, needs, urgency, and decisions on filters of what is important), so too could gatekeepers in the field of “AI” and its peripheries (Symbolic, ML or Neuro-symbolic) be perceived as deciding for the global masses what these masses need, and what their contribution to guiding the benefit of “AI” applications for all should be. It is fair and understandable that such considerations start where those with insight wander. It is also fair to state that the “masses” cannot really be asked. And yet, who then? When then? How then? Considering the proclaimed impacts of output from the field of “AI,” is it desirable that the thinking and acting stay where the bickering industry and academic experts roam?

Let us give this last question some context:

For instance, in too broad, and yet more concrete, strokes: hardly anyone from the under-18 generations is asked, let alone prepared, to critically participate in the supposedly transformational thinking about, and deployment of, “AI”-related or hyped output. This might be because these young humans are hardly offered the tools to gain insight beyond the skill of building some “AI”-relatable deliverable. The techno-focused techniques are heralded, again understandably (though whether this is universally agreeable is open to question), as a must-have before entering the job market. Again, to be fair, sprinkled approaches to critical and ethical thinking are presented to these youngsters (in some schools). Perhaps a curriculum or two, some designed at MIT, might come to mind. Nevertheless, some of these attributes seem to be offered only as mere echoes within techno-centrist curricula. Does such an offering risk flavoring learning with ethics-washing? Is (re)considering where the voices of reflection come from, where they are nurtured and where they are located, a Luddite’s stance? As the witch was, it too could easily be labeled as such.

“AI” applications are narrated as transforming, ubiquitous, methodological, universal, enriching and engulfing. Does ethical and critical skill-building similarly meander through the magical land of formalized learning? Ethical and critical processes of thought and act (including computational thinking beyond “coding” alone) seem shunned, as if there were a fear they might become ubiquitous, methodological, enriching, engulfing or universal (even if diverse, relative, context-dependent, or seen through multiple cultural lenses). Is this a systemic pattern, an undercurrent in human societies? At times it seems that those who court such a metaphorical creek are shunned as the village fool or the witch at its outskirts.

Besides youth, color and gender see their articulations staged while filtered through the few voices that represent them from within the above-mentioned fields. Are more than a few nuances then silenced, yet scraped for handpicked data, and left to bite the dust?

Finally, looking at the conditions in more abstract terms (with practical and relational consequences): humans are mechanomorphized (i.e., seen as machines of not much more complexity than human-made machinery and, like an old computer, dismissed if no longer fitting the mold or the preferred model). Simultaneously, pulling in the opposite direction, artificial machines are being anthropomorphized (i.e., made to act, look and feel as if of human flesh and psyche). Each of their applied, capitalized techniques is glorified. Some machines are promoted (through lenses of fear-mongering, one might add) as better at your human job, or as better than an increasing number of features you might perceive as part of becoming human(e).

Looking at the above broadly brushed scenarios:

Can we speak of fair technologies (e.g., fair “AI” applications) if the gatekeepers to fairness are mainly those who create, or those who sanction the creation of, the machinery? Can we speak of fair technologies if those who do create them have no constructive space to critique, to evaluate via various vectors, and to construct creatively through such feedback loops of thought-to-action? Can we speak of fair technology, or fair “AI” applications, if those who are influenced by its machinery, now and into the future, have few tools to question and evaluate? Should fairness, and its tools for evaluation, be reserved for the initiated alone?

While a human is not the center of the universe (anymore / for an increasing number of us), the carefully nurtured tools to think, to participatorily evaluate and to (temporarily) place the implied transformations are sorely missing, or remain in the realm of the mysterious, the magical, witches, mesmerizations, hero-worship or feared monstrosities.

References:

Patton, M. Q. (1997). Intended Process Uses: Impacts of Evaluation, Thinking and Experiences. In M. Q. Patton, Utilization-Focused Evaluation: The New Century Text (Ch. 5, pp. 87–113). London: Sage.

Sirotnik, K. A. (Ed.). (1990). Evaluation and Social Justice: Issues in Public Education. New Directions for Program Evaluation, No. 45. San Francisco: Jossey-Bass.